Who is watching the Practical Data Quality Metrics Dashboard?

Practical data quality metrics are simply the ones you would think of with minimal effort. The real question, however, isn’t which metrics or key performance indicators (KPIs) you should measure. It is whether you are measuring anything at all, and whether you are letting senior management know the results. Why bother? Bad data costs money!

Bad Data Costs Money

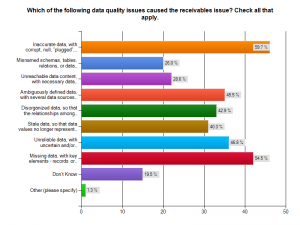

Our “Poor Data Quality – Negative Business Outcomes” survey asked participants to monetize negative business outcomes such as lost customers, lost up-sell opportunities, excessive inventory levels, and similar consequences that could be attributed to poor data quality. Over 350 people have already participated. Here are the responses about negative business outcomes from poor quality accounts receivable data.

Almost 60% identified inaccurate data, with corrupt, null, “plugged”, or wrong values. Nearly 55% selected missing data, with key elements such as records or fields not completed, and nearly 48% believed the problem lay in unreliable data, with uncertain and/or suspect data lineage. More than 22% of those respondents believed they might have improved their accounts receivable results by 6-10%.

Here is an example of a publicly traded company, to put those savings into context. According to Marketwatch.com, General Motors had 3rd quarter 2013 accounts receivable of $325M USD. A 10% improvement would have reduced that receivables amount by $32.5M USD. If the same improvement held for four quarters at a similar receivables level, that would come to $130M USD. I would invest in improving my receivables with that kind of return, wouldn’t you?
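For anyone who wants to plug in their own numbers, here is a minimal sketch of that arithmetic in Python. The receivables figure is the one quoted above, and the 6% and 10% rates come from the survey's 6-10% range. The function and variable names are made up for illustration.

```python
def receivables_reduction(receivables_usd, improvement_pct):
    """Dollar reduction in receivables for a given percentage improvement."""
    return receivables_usd * improvement_pct / 100


# Figure quoted in the text above (3rd quarter 2013, in USD).
Q3_2013_RECEIVABLES = 325_000_000

# Low and high ends of the 6-10% improvement range from the survey.
low = receivables_reduction(Q3_2013_RECEIVABLES, 6)
high = receivables_reduction(Q3_2013_RECEIVABLES, 10)

print(f"6% improvement:  ${low / 1e6:.1f}M USD")
print(f"10% improvement: ${high / 1e6:.1f}M USD")
```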

The Simplest Metrics

We asked survey respondents to monetize the costs of poor data quality and identify the reasons for that poor quality. However, we didn’t ask how they drew their causal conclusions. Did the respondents have practical data quality metrics at hand?

- Did a respondent know their customer data was poor because they tracked how often data such as email addresses, phone numbers, and mailing addresses resulted in rejects?

- How about manual reconciliations? Had someone tracked the frequency of manual fix-ups applied to a set of data? Was there a record of work-hours applied to filling in missing data values, or re-entering data that was simply and clearly wrong?

- What about duplicated data? Did a respondent record the number of complaints received about duplicate emails or snail mails?
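None of these metrics needs special tooling. As a rough sketch, assuming you already log contact attempts, manual fix-ups, and customer email addresses somewhere, the three questions above could be turned into numbers like this. The record layout, the function names, and the sample data are all hypothetical and only there to show the idea.

```python
from dataclasses import dataclass


@dataclass
class ContactAttempt:
    customer_id: str
    channel: str    # e.g. "email", "phone", "mail"
    rejected: bool  # True if bounced, disconnected, or returned


def reject_rate(attempts):
    """Fraction of contact attempts rejected, per channel."""
    totals, rejects = {}, {}
    for a in attempts:
        totals[a.channel] = totals.get(a.channel, 0) + 1
        rejects[a.channel] = rejects.get(a.channel, 0) + (1 if a.rejected else 0)
    return {channel: rejects[channel] / totals[channel] for channel in totals}


def manual_fix_hours(fix_log):
    """Total work-hours of manual fix-ups, per data set."""
    hours = {}
    for data_set, spent in fix_log:
        hours[data_set] = hours.get(data_set, 0.0) + spent
    return hours


def duplicate_rate(emails):
    """Fraction of records whose normalized email was already seen."""
    seen, dupes = set(), 0
    for email in emails:
        key = email.strip().lower()
        if key in seen:
            dupes += 1
        else:
            seen.add(key)
    return dupes / len(emails) if emails else 0.0


# Made-up sample data, purely for illustration.
attempts = [
    ContactAttempt("c1", "email", False),
    ContactAttempt("c2", "email", True),
    ContactAttempt("c3", "email", False),
    ContactAttempt("c4", "email", False),
    ContactAttempt("c1", "phone", True),
    ContactAttempt("c2", "phone", False),
]
fix_log = [("customers", 1.5), ("customers", 2.5), ("invoices", 3.0)]
emails = ["a@example.com", "A@example.com ", "b@example.com", "c@example.com"]

rates = reject_rate(attempts)
hours = manual_fix_hours(fix_log)
dup = duplicate_rate(emails)

print(rates)
print(hours)
print(dup)
```

Even counts this crude, tracked month over month, give you something better than a gut feel to bring to management.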

We will gather information on how respondents identified the causes of poor data quality when we begin our interview process this December. Prediction #1 is that most respondents relied on gut feel and water cooler discussion to identify the causes of poor data quality, and did not have practical data quality metrics to guide them. I look forward to being proved wrong on this.

Telling the Big Dogs the Bad News

If the survey results are right, organizations are taking some big pocketbook hits from poor data quality. If the right executives knew about these excess costs, and the reasonable costs of remediation and subsequent elimination of poor data quality, chances are the problems would already be fixed.

Prediction #2 is that without good practical data quality metrics, no one is willing to ring the data quality alarm bell and warn the folks in command that there is a problem. If someone came armed with convincing evidence of the problems and their associated costs, I think it likely that the data mess would get cleaned up. I’d be very interested in being proved wrong on this prediction, and even more interested in learning of cases where problems were documented and reported, but no corrective actions were authorized and implemented.

The Bottom Line

Poor data quality costs organizations money and opportunity. Our survey confirms this across the spectrum of industries and business functional areas. An open question remains: why aren’t these problems receiving attention and correction? I’m predicting that the data quality problems are not being measured and recorded, or that the measurement and recording are not done in an effective way. Without such documented evidence, no one is making a strong case for the big fix. Our survey respondent interview process should shed some light on this topic.

Until next time, thanks much to the IBM InfoGov Community, The Robert Frances Group, and Chaordix, with whom I have been running our Poor Data Quality – Negative Business Outcomes survey.
