What does Measuring Information Confidence Mean to You?

When you read the phrase “Measuring Information Confidence”, what does your gut tell you? Are you thinking about what gives you confidence in the information you use for decision-making? Could you quantify your thoughts? Are you considering the fundamental elements of data quality, such as whether information is accurate, and whether it is named clearly enough that you can be sure you are looking at the right information?

Would your decision-making confidence be shaken if the information that informed a decision was not clearly defined, or not current? Would you delay or avoid making decisions based on data you thought was dodgy? How would you quantify your need to feel confident in the information you use for decision-making? Do you ever ask yourself these questions? Here are a few thoughts on measuring information confidence.

Possible Information Confidence Gap

Respondents to our “Poor Data Quality – Negative Business Outcome” survey have told us that they experience excessive costs, lost sales opportunities, and dissatisfied customers because their information quality is sub-par. They went further, identifying the causes of the sub-par quality and monetizing the negative results.

However, we don’t yet know whether they were confident in their flawed data and were led down the wrong path, or whether they had no confidence in their data and avoided making decisions that should have been easy. Perhaps decision-makers ignored their data and made “educated guesses”. We will learn more about this during the interview phase with survey participants, and will let you know what we find.

Information Confidence Criticality Level

In the abstract, not every decision we make about customers, inventory levels, or strategic planning is of equal importance. For any given decision, each element of information on which the decision depends will be more or less important. Some decisions are critical while others are incidental. The same is true for the information that informs such decisions, and for the confidence we have in the quality of that information.

Ideally, the most critical decisions would be based on the information in which the decision-maker has the greatest confidence. How much attention should we pay to the quality of this data set or that one? How should we rate information criticality, and the degree of confidence in information that critical decisions demand? I think an information confidence criticality level would capture, in a simple way, how much a decision depends on information quality.

Let me borrow from the world of software testing, where the concept of a “Software Integrity Level” (SIL) is used to bound the scope and objectives of testing according to how critical the software under test is and how severe the outcome of its failure would be. Mutatis mutandis, here is my “Information Confidence Integrity Level” scale.

I decided on a 5-level scale, where Level 5 demands extremely high information confidence because a mistake could cause physical harm or death. Because the decisions made with this information are critical, the investment in people, process, and technology needed to ensure the information is accurate, current, and easy to identify would be commensurately great. You get the idea. Of course, you can develop your own scale to suit your purposes. The point is to think about information criticality when you think about information quality. Criticality should drive investment in data quality.

Information Confidence Integrity Level Scale

For any decision, ask yourself which of the following assertions would be true if you made a mistaken decision based on poor-quality data. The answer should give you an idea of the investment and effort needed to ensure you make that decision with high-quality data.

5 – Will cause personal physical harm or death

4 – Will cause direct material losses and/or violate laws or regulations

3 – Will violate business policies

2 – Will cause direct nonmaterial financial losses for stakeholders

1 – Will annoy or confuse stakeholders
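
The question above amounts to a simple rule: a decision inherits the level of the worst consequence that a data-driven mistake could cause. Here is one hypothetical way to encode that rule in Python. The names `ConfidenceLevel` and `assign_level` are illustrative only, not part of any existing tool, and returning Level 1 when no consequence applies is an assumption of this sketch rather than part of the scale.

```python
from enum import IntEnum


class ConfidenceLevel(IntEnum):
    """Hypothetical encoding of the Information Confidence Integrity Level scale."""
    ANNOY_OR_CONFUSE = 1        # Will annoy or confuse stakeholders
    NONMATERIAL_LOSS = 2        # Will cause direct nonmaterial financial losses
    POLICY_VIOLATION = 3        # Will violate business policies
    MATERIAL_LOSS_OR_LEGAL = 4  # Will cause material losses and/or violate laws or regulations
    HARM_OR_DEATH = 5           # Will cause personal physical harm or death


def assign_level(consequences: set[ConfidenceLevel]) -> ConfidenceLevel:
    """Rate a decision by the worst consequence a data-driven mistake could cause.

    `consequences` holds every assertion on the scale that would be true if
    the decision were made with poor-quality data. Returning Level 1 for an
    empty set is an assumption, not part of the original scale.
    """
    if not consequences:
        return ConfidenceLevel.ANNOY_OR_CONFUSE
    return max(consequences)  # IntEnum members compare by their numeric level


# Example: a pricing error could cost money (Level 2) and breach a regulation (Level 4).
rating = assign_level({ConfidenceLevel.NONMATERIAL_LOSS, ConfidenceLevel.MATERIAL_LOSS_OR_LEGAL})
print(rating.name, int(rating))  # MATERIAL_LOSS_OR_LEGAL 4
```

Taking the maximum, rather than an average, reflects the scale's intent: one possible regulatory violation makes a decision Level 4 no matter how many minor annoyances accompany it.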

It appears that most of our survey participants identified Level 1, 2, and 3 gaps in their information confidence. Interviews will give us greater insight.

Making a decision – Are you good to go?

Let’s say that you are making a Level 4 decision. You don’t want to violate laws and regulations. So how good must your underlying data be to give you the confidence to make that decision? Perhaps it would be useful to analyze your data to determine what percentage of it is correct. You might feel comfortable making Level 4 decisions if 95% of the data on which you based your decision was correct. Organizational governance might specify the quality levels suggested or required for decisions of a given criticality. Maybe a Level 5 (life-and-death) decision would demand “five nines” (99.999%) quality. So the idea is this: the criticality of a decision should determine the required level of data quality, and that quality should be assessed regularly.
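
A minimal sketch of that “good to go” check in Python follows. It assumes that “correct” can be expressed as a per-record check you supply, and that governance publishes a minimum share of correct records for each level. The 95% (Level 4) and 99.999% (Level 5) figures come from the paragraph above; the Level 1 to 3 thresholds, the ZIP-code check, and the names `share_correct` and `good_to_go` are hypothetical.

```python
from typing import Callable, Iterable, Mapping

# Minimum share of correct records required for each criticality level.
# Level 4 (95%) and Level 5 ("five nines") follow the examples in the text;
# the Level 1-3 values are hypothetical placeholders for governance to set.
REQUIRED_QUALITY: Mapping[int, float] = {
    1: 0.80,
    2: 0.85,
    3: 0.90,
    4: 0.95,
    5: 0.99999,
}


def share_correct(records: Iterable[dict], is_correct: Callable[[dict], bool]) -> float:
    """Fraction of records that pass the caller-supplied correctness check.

    An empty data set scores 0.0, so "no data" never counts as "good data".
    """
    total = passed = 0
    for record in records:
        total += 1
        if is_correct(record):
            passed += 1
    return passed / total if total else 0.0


def good_to_go(
    level: int,
    records: Iterable[dict],
    is_correct: Callable[[dict], bool],
    thresholds: Mapping[int, float] = REQUIRED_QUALITY,
) -> bool:
    """True if measured data quality meets the bar for a decision at `level`."""
    return share_correct(records, is_correct) >= thresholds[level]


# Hypothetical usage: 97 of 100 customer records carry a valid 5-digit ZIP code.
customers = [{"zip": "02139"}] * 97 + [{"zip": ""}] * 3


def valid_zip(record: dict) -> bool:
    return len(record["zip"]) == 5 and record["zip"].isdigit()


print(good_to_go(4, customers, valid_zip))  # True: 0.97 >= 0.95
print(good_to_go(5, customers, valid_zip))  # False: 0.97 < 0.99999
```

The same data can be good enough for one decision and nowhere near good enough for another; the threshold table, not the data, encodes that judgment. Passing the thresholds in as a parameter lets each organization substitute its own governance policy.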

On the Receiving End

What if you are the victim of poor information quality? Think about a “clutch” situation demanding data quality consistent with Information Confidence Integrity Level 5. What happens when the information quality is wanting? Nothing good.

Flying by the seat of your pants?

Many of us fly for business, probably more than we would like. We trust the FAA, the airlines, our flight crews, and their instruments to take off and land safely. What if the pilots’ instrument panel reported inaccurate information in flight? Suppose the pilots became confused and lost confidence in the information reported to them. What should they do? Would they make the right moves? In the case of Air France flight 447, which crashed killing all aboard, the pilots could not make sense of apparently wrong information. You can read about the analysis of the “black box” recordings here.

The computers, which are programmed not to feed pilots misleading information, could no longer make sense of the data they were receiving and blanked out some of the instruments. Also, the stall warnings ceased.

… something bewildering happened when [the pilot] put the nose down. As the aircraft picked up speed, the input data became valid again and the computers could now make sense of things. Once again they began to shout: “Stall, stall, stall.” … panicked by the renewed stall alerts, [the pilot] chose to resume his fatal climb.

Let’s hope you never face a situation where your flight crew doubts the validity of the information they receive from their aircraft, and becomes badly confused.

Hoping the Docs got it right?

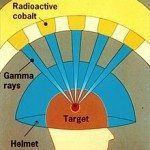

The Gamma Knife is a special medical device that treats otherwise inoperable brain lesions. It releases beams of gamma rays from many points selected to converge precisely on the lesion to be inactivated. Not surprisingly, the information on where to converge the many (200+) possible beams is of critical importance. Wikipedia’s page on the Gamma Knife reminds us…

Although technological improvements in imaging and computing, increased availability and clinical adoption have broadened the scope of radiosurgery in recent years,[3][4] the localization accuracy and precision implicit in the word “stereotactic” remains of utmost importance for radiosurgical interventions today.

If your brain were the target of Gamma Knife treatment, you would want the radiologists to have absolute confidence that the measurements they took for localization were spot on. This is Information Confidence Integrity Level 5 for certain.

Poor Data Quality – Negative Business Outcome Update

Whatever confidence our survey participants have in their information quality, our Poor Data Quality – Negative Business Outcomes survey has now been in flight for just over three months, with great support from the IBM InfoGov Community, The Robert Frances Group, and Chaordix. Thus far, we have learned that poor data quality carries real and substantial costs, and that business executives see higher costs than their IT executive counterparts do.

Reported Causes of Negative Business Outcomes have Stabilized

With over 350 survey responses in progress at this writing, the reported causes of poor data quality seem to have stabilized. How have the results evolved over time?

[table id=2 /]

So, inaccurate, unreliable, ambiguously defined, and disorganized data topped the list of problems in August. By October, inaccurate, ambiguously defined, unreliable, and disorganized data were still the leading problems. At this writing, the biggest problems remain the same. Surely these data problems must cause an Information Confidence Gap among decision-makers and stakeholders.

The Bottom Line

Measuring information confidence as I’ve proposed it remains an art, not a science. However, I believe it would be possible to develop a rule-based approach to assigning an Information Confidence Integrity Level, by assessing how much information confidence you need given the decisions made, the actions taken, and the downstream consequences of applying your data to these tasks. Contact us, and let us know what you think.
