Rome and our “Poor Data Quality” survey weren’t built in a day.

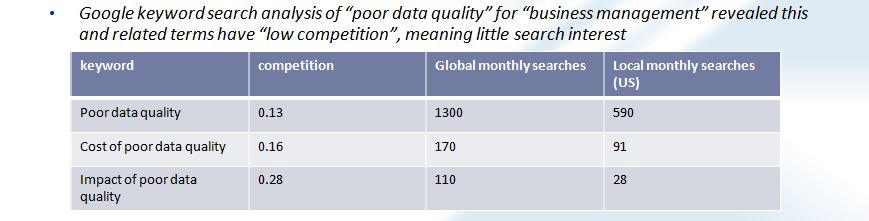

In my last blog post about poor data quality, I noted that in recent days, little had been published about poor data quality. In fact, a small experiment with Google AdWords indicated that “Poor Data Quality” and related terms had Low Value. Here are the results of that little test.

Poor Data Quality Considered Harmful to Business Outcomes!

Yes, that’s the thesis that Cal Braunstein, CEO of the Robert Frances Group, and I presented to the InfoGov Community last Friday. Of its 3,000+ members, about 30 joined the conference call, and we received excellent feedback and constructive suggestions about the wording of our survey questions.

The Challenge of Creating Survey Questions

We seek insight into the business costs of poor data quality by asking survey respondents to monetize the losses, costs, or reduced benefits they experienced due to poor data quality. However, that is easier said than done.

One challenge is finding respondents who have a business-wide or line-of-business-wide view of the costs of poor data quality.

Another challenge is finding respondents who are willing to admit that their organization’s poor data quality has had costs for their business and/or themselves.

A third challenge is constructing our survey questions so that we are able to compare results across business sizes and industries. You can help us with this challenge!

A One-Question Survey for You…

Here are the two approaches we are considering for this question.

Percentage Approach

In your opinion, did poor data quality cause you to lose some or all of a client’s business (for example, by failing to “know your customer” or by causing inaccurate or inconsistent transaction or position reporting)? If so, what percentage of revenue do you believe was lost due to poor data quality (1-5%, 5-10%, 10-20%, >20%, >50%, >80%, 100%)?

Dollar-ized Approach

In your opinion, did poor data quality cause you to lose some or all of a client’s business (for example, by failing to “know your customer” or by causing inaccurate or inconsistent transaction or position reporting)? If so, how much revenue, in US dollars (USD), do you believe was lost due to poor data quality (<$1M, $1M-$10M, $10M-$50M, $50M-$100M, >$100M)?

Percentages might be easier to “guesstimate”, but they make comparisons across businesses of different sizes difficult. Dollar amounts are easier to compare, but respondents may not have that information, especially if their view is limited to a department or other organizational unit that does not publish revenue figures.

Which question (percentage or dollar amount) would you find easier to answer? Please comment on this post and let us know!

What do we mean by “Poor Data Quality”?

When we speak of poor data quality, we mean data that is:

- Inaccurate, with corrupt, null, “plugged”, or wrong values

- Misnamed, so that finding the authoritative data source is error-prone or impossible

- Unreachable, with necessary data locked inside inaccessible application silos

- Ambiguously defined, with data sources having conflicting data definitions

- Disorganized, so that the relationships among the data elements and structures are obscure

- Stale, so that data values no longer represent the facts and relationships in the physical world

- Unreliable, with uncertain and/or suspect data lineage

- Missing and/or incomplete, with some values absent or “plugged”

Note that, for our purposes, poor data quality does not mean that an additional data feed, data source, data integration tool, or other product would have produced better results for an organization or an individual.

The Bottom Line

We received great feedback from the InfoGov community and appreciate their participation in our conference call presentation. Soon we will present our revised survey approach at another community meeting. Our revisions will reflect the InfoGov input, as well as any suggestions about the survey questions that you, the reader, contribute in the comments on this post. We look forward to hearing from you!

A survey like this is long overdue.